Elasticsearch provider module

Installing with Maven

Maven is the easiest way to install the module. Add the following to your bundle:

```xml
<dependency>
  <groupId>info.magnolia.elasticsearch</groupId>
  <artifactId>magnolia-query-manager</artifactId>
  <version>1.0.1</version>
</dependency>
<dependency>
  <groupId>info.magnolia.elasticsearch</groupId>
  <artifactId>magnolia-es-content-delivery</artifactId>
  <version>1.0.1</version>
</dependency>
```

Installing Elasticsearch

With Homebrew:

```shell
brew install elasticsearch
brew services start elasticsearch
brew install logstash
brew services start logstash
brew install kibana
brew services start kibana
```

Alternatively, install manually:

- Download the Elasticsearch binaries: https://www.elastic.co/downloads/elasticsearch
- Download the Kibana binaries: https://www.elastic.co/downloads/kibana
- Follow the installation steps on those download pages.
- Run `bin/elasticsearch` from your command line.

Set up an Elasticsearch cluster

- Copy the `config` directory of your installation to a new folder, for example `<path-to-installation>/configurations/001/config`. For Homebrew installations, the configuration files are located in `/usr/local/etc/elasticsearch`.
- Uncomment and modify the following in `elasticsearch.yml`:

  ```yaml
  cluster.name: magnolia-cluster
  http.cors:
    enabled: true
    allow-origin: "*"
  ```

- If you're using multiple configurations, you'll also need to uncomment and modify the following in `elasticsearch.yml`:

  ```yaml
  path.data: <path-to-installation>/data
  path.logs: <path-to-installation>/logs
  http.port: 9201 # Or whatever unique port you prefer.
  ```

  Then point `ES_PATH_CONF` at the configuration directory before starting the node:

  ```shell
  export ES_PATH_CONF=<path-to-installation>/config
  ```

You can verify that both servers are running by opening http://localhost:9200 (and any other node ports you configured) in a browser. Each node returns a JSON response that reports magnolia-cluster as its cluster name.
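The same check can be scripted. The sketch below uses only the Python standard library and reads the `cluster_name` field that an Elasticsearch node reports on its root endpoint. It assumes the nodes accept plain HTTP without authentication, as the http://localhost:9200 URL above implies, and that they listen on 9200 and 9201 (the example ports in this section). The helper name `fetch_cluster_name` is hypothetical.

```python
import json
import urllib.request

EXPECTED_CLUSTER = "magnolia-cluster"  # cluster.name set in elasticsearch.yml


def fetch_cluster_name(url: str, timeout: float = 5.0) -> str:
    """Return the cluster_name reported by an Elasticsearch node's root endpoint."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        info = json.load(response)
    return info["cluster_name"]


if __name__ == "__main__":
    # Assumed ports: 9200 (default) and 9201 (the example second node).
    for port in (9200, 9201):
        url = f"http://localhost:{port}"
        try:
            name = fetch_cluster_name(url)
        except (OSError, ValueError, KeyError) as err:
            # OSError covers refused connections and timeouts; ValueError covers bad JSON.
            print(f"{url}: no valid response ({err})")
            continue
        status = "ok" if name == EXPECTED_CLUSTER else "unexpected cluster"
        print(f"{url}: cluster_name={name} ({status})")
```

A node that is not running shows up as "no valid response" rather than stopping the script, so all configured ports are checked in one pass.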

Usage

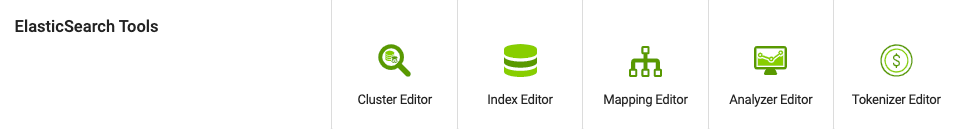

Several apps are available for building an index with the appropriate search analyzers and tokenizers:

- Cluster Editor app: Configure a cluster and its server nodes.
- Index Editor app: Define the index and provide the source data locations.
- Mapping Editor app: Map properties to data types and define how each property is indexed, including the analyzer applied to each field.

You can create individual analyzers and tokenizers with the Analyzer Editor and Tokenizer Editor, respectively.

Tokenizers

A tokenizer receives a stream of characters, breaks it up into individual tokens (usually individual words), and outputs a stream of tokens. For instance, a whitespace tokenizer breaks text into tokens whenever it sees any whitespace. It would convert the text "Quick brown fox!" into the terms [Quick, brown, fox!].

See the Elasticsearch tokenizer reference documentation for more details.
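To make the token stream concrete, here is a minimal pure-Python approximation of the whitespace tokenizer. It is not Elasticsearch code: it splits on runs of whitespace and records each token's character offsets and position, similar to what Elasticsearch's `_analyze` API reports, but it ignores details such as the maximum token length. The `Token` type and function name are hypothetical.

```python
import re
from typing import List, NamedTuple


class Token(NamedTuple):
    term: str
    start_offset: int  # index of the token's first character in the input
    end_offset: int    # index just past the token's last character
    position: int      # ordinal of the token in the stream


def whitespace_tokenize(text: str) -> List[Token]:
    """Split text into tokens wherever whitespace occurs (approximation only)."""
    return [
        Token(match.group(), match.start(), match.end(), position)
        for position, match in enumerate(re.finditer(r"\S+", text))
    ]


tokens = whitespace_tokenize("Quick brown fox!")
print([t.term for t in tokens])  # ['Quick', 'brown', 'fox!']
```

Note that the punctuation stays attached to "fox!", because the whitespace tokenizer splits only on whitespace.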

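The Edge NGram tokenizer first breaks text into words whenever it encounters a character outside the configured character classes (the Token Chars field), and then emits N-grams of each word, anchored to the start of the word. For example, with Min Gram = 2 and Max Gram = 5, the word "quick" produces [qu, qui, quic, quick]. Because the prefixes of each word are indexed, a partially typed word can match the full word, which makes this tokenizer useful for search-as-you-type and autocomplete.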

- From the JCR browser, in the query manager workspace, import `elasticsearch-provider/magnolia-es-configuration/src/main/resources/mgnl-bootstrap-manual/elastic-configuration/query-manager.tokenizers.xml`.
- Make sure to remove the empty "tokenizers" content node.

Fields:

- Name = edge_ngram_tokenizer
- Type = edge_ngram
- Min Gram = 2
- Max Gram = 5
- Token Chars = Letter
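The following pure-Python sketch approximates how a tokenizer with these fields (Min Gram = 2, Max Gram = 5, Token Chars = Letter) splits and expands text. It is an illustration, not the Elasticsearch implementation: `str.isalpha` stands in for Elasticsearch's letter character class, and the function name is hypothetical.

```python
from itertools import groupby
from typing import List


def edge_ngram_tokenize(text: str, min_gram: int = 2, max_gram: int = 5) -> List[str]:
    """Approximate an edge_ngram tokenizer whose Token Chars are letters only."""
    # Any non-letter character ends the current word (Token Chars = Letter).
    words = ["".join(chars) for is_letter, chars in groupby(text, key=str.isalpha) if is_letter]
    grams = []
    for word in words:
        # Emit prefixes anchored at the start of the word, min_gram..max_gram characters long.
        for length in range(min_gram, min(max_gram, len(word)) + 1):
            grams.append(word[:length])
    return grams


print(edge_ngram_tokenize("Quick brown fox!"))
# ['Qu', 'Qui', 'Quic', 'Quick', 'br', 'bro', 'brow', 'brown', 'fo', 'fox']
```

Words shorter than Min Gram produce no grams at all. Case is preserved here because, in this setup, lowercasing is handled by the Lower Case filter of the analyzer that uses the tokenizer, not by the tokenizer itself.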

Analyzers

Text analysis is the process of converting text, like the body of an email, into tokens or terms which are added to the inverted index for searching. Analysis is performed by an analyzer, which can be either a built-in analyzer or a custom analyzer defined per index.

See the Elasticsearch text analysis documentation for more details.
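An Elasticsearch analyzer applies zero or more character filters, exactly one tokenizer, and zero or more token filters, in that order. The sketch below models that pipeline in plain Python and assembles an approximation of the keyword_html_analyzer listed in the analyzer table of this section. It is illustrative only: `Analyzer`, `html_strip`, and `ascii_fold` are hypothetical stand-ins, not Elasticsearch or Magnolia APIs, and they simplify the real filters.

```python
import unicodedata
from dataclasses import dataclass, field
from html.parser import HTMLParser
from typing import Callable, List


class _TextCollector(HTMLParser):
    """Collects the text content of an HTML fragment, dropping the tags."""

    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)  # decode entities such as &amp;
        self.parts: List[str] = []

    def handle_data(self, data: str) -> None:
        self.parts.append(data)


def html_strip(text: str) -> str:
    collector = _TextCollector()
    collector.feed(text)
    collector.close()
    return "".join(collector.parts)


def ascii_fold(term: str) -> str:
    # Approximation: decompose accented characters and drop the combining marks.
    return "".join(c for c in unicodedata.normalize("NFKD", term) if not unicodedata.combining(c))


@dataclass
class Analyzer:
    tokenizer: Callable[[str], List[str]]
    char_filters: List[Callable[[str], str]] = field(default_factory=list)
    token_filters: List[Callable[[str], str]] = field(default_factory=list)

    def analyze(self, text: str) -> List[str]:
        for char_filter in self.char_filters:    # 1. rewrite the raw character stream
            text = char_filter(text)
        tokens = self.tokenizer(text)            # 2. split it into tokens
        for token_filter in self.token_filters:  # 3. transform each token (one-to-one only here)
            tokens = [token_filter(t) for t in tokens]
        return tokens


keyword_html_analyzer = Analyzer(
    tokenizer=lambda text: [text],  # keyword tokenizer: the whole input is one token
    char_filters=[html_strip],      # HTML Strip
    token_filters=[str.lower, ascii_fold, str.strip],  # Lower Case, ASCII Folding, Trim
)

print(keyword_html_analyzer.analyze("<p> Café <b>Crème</b> </p>"))  # ['cafe creme']
```

Dropping `html_strip` from `char_filters` gives the equivalent sketch of keyword_analyzer, which is the only difference between the two in the table.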

The HTML analyzer described below can be applied automatically to mapped fields whose values are detected as HTML text.

- From the JCR browser, in the query manager workspace, import `elasticsearch-provider/magnolia-es-configuration/src/main/resources/mgnl-bootstrap-manual/elastic-configuration/query-manager.analyzers.xml`.
- Make sure to remove the empty "analyzers" content node.

Here are four commonly used analyzers that you can apply to your search index fields.

| Analyzer | Overview | Sample |
|---|---|---|
| Edge NGram Analyzer | Useful for suggestions and autocomplete. | Similar to edge_ngram_search_analyzer, but applies the edge_ngram_tokenizer created in the Tokenizers section. Fields: Name = edge_ngram_analyzer; Tokenizer = edge_ngram_tokenizer; Filters = Lower Case; Type = standard; Default Analyzer? = selected; Name = edgengram; Type = text; Search Analyzer = edge_ngram_search_analyzer |
| Edge NGram Search Analyzer | Same as above, but provided with a different name so that it can be modified independently in the future. | Uses the built-in lowercase tokenizer, which splits search terms on non-letter characters and lowercases them, so that matching is case-insensitive. Fields: Name = edge_ngram_search_analyzer; Tokenizer = lowercase; Type = standard |
| Keyword Analyzer | Searches via keywords. | Similar to keyword_html_analyzer, except that it is applied to text without HTML tags. Fields: Name = keyword_analyzer; Tokenizer = keyword; Filters = Lower Case, ASCII Folding, and Trim; Type = custom; HTML Alternative for = keyword_html_analyzer; Default Analyzer? = selected; Name = completion; Type = completion. keyword_analyzer and keyword_html_analyzer are interchangeable: the system automatically detects whether a text value is HTML and, if so, applies the HTML analyzer in its place, as defined by the "HTML Alternative for" field. |
| Keyword HTML Analyzer | Strips HTML from text and provides clean keyword searching. | Applies keyword analysis to text in the form of HTML. The HTML tags are stripped out, so searches match only the text content. Fields: Name = keyword_html_analyzer; Tokenizer = keyword; Filters = Lower Case, ASCII Folding, and Trim; Character Filters = HTML Strip; Type = custom |
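For readers who know the Elasticsearch REST API, the tokenizer and analyzers above roughly correspond to the index settings sketched below as a Python dictionary. This is an assumption about what the editor apps generate, not their actual output. The keys (`tokenizer`, `char_filter`, `filter`, `search_analyzer`) are standard Elasticsearch index settings; the table lists Type = standard for the edge n-gram analyzers, but in raw Elasticsearch settings an analyzer that names its own tokenizer is normally declared as `custom`, which is what the sketch uses. The field name `title` and the HTML check in `pick_keyword_analyzer` are hypothetical; the module's real HTML detection is not documented here.

```python
import json
import re
from typing import Any, Dict

KEYWORD_FILTERS = ["lowercase", "asciifolding", "trim"]  # Lower Case, ASCII Folding, Trim

# Analysis settings mirroring the tokenizer and analyzers described above.
ANALYSIS: Dict[str, Any] = {
    "tokenizer": {
        "edge_ngram_tokenizer": {
            "type": "edge_ngram",
            "min_gram": 2,
            "max_gram": 5,
            "token_chars": ["letter"],
        }
    },
    "analyzer": {
        "edge_ngram_analyzer": {
            "type": "custom",
            "tokenizer": "edge_ngram_tokenizer",
            "filter": ["lowercase"],
        },
        "edge_ngram_search_analyzer": {"type": "custom", "tokenizer": "lowercase"},
        "keyword_analyzer": {"type": "custom", "tokenizer": "keyword", "filter": KEYWORD_FILTERS},
        "keyword_html_analyzer": {
            "type": "custom",
            "tokenizer": "keyword",
            "char_filter": ["html_strip"],
            "filter": KEYWORD_FILTERS,
        },
    },
}

# Hypothetical field: index with edge n-grams, search with whole lowercase terms.
MAPPINGS: Dict[str, Any] = {
    "properties": {
        "title": {
            "type": "text",
            "analyzer": "edge_ngram_analyzer",
            "search_analyzer": "edge_ngram_search_analyzer",
        }
    }
}

_HTML_TAG = re.compile(r"</?[a-zA-Z][^>]*>")


def pick_keyword_analyzer(value: str) -> str:
    """Hypothetical HTML detection: use the HTML variant when the value contains a tag."""
    return "keyword_html_analyzer" if _HTML_TAG.search(value) else "keyword_analyzer"


if __name__ == "__main__":
    print(json.dumps({"settings": {"analysis": ANALYSIS}, "mappings": MAPPINGS}, indent=2))
    print(pick_keyword_analyzer("<p>Hello</p>"))  # keyword_html_analyzer
    print(pick_keyword_analyzer("Hello"))         # keyword_analyzer
```

Sending the printed body to Elasticsearch's create index API (`PUT /<index-name>`) would create an index with these analyzers. How the Magnolia module itself creates and names indexes is outside the scope of this sketch.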